The Procrastinator’s Guide to ChatGPT

By Sonia Choy 蔡蒨珩

True story: One of my friends wrote his thesis with the help of Clyde, Discord’s AI server bot powered by OpenAI, the same company that created ChatGPT (Chat Generative Pre-trained Transformer). He was struggling with parts of the paper, asked Clyde to rewrite a clunky section, and boom, it was done. My friends and I also often turned to ChatGPT when we had to draw graphs and diagrams in an unfamiliar programming language. ChatGPT would churn out code that was 90% correct in ten seconds, saving us huge amounts of time, since we only needed to make slight modifications. ChatGPT has truly transformed our lives and the world of education.

But ChatGPT isn’t foolproof yet. That same friend once asked an early version of ChatGPT a simple question: What is 20 – 16? After a few seconds, it answered “3.” We laughed about it for a few minutes. People have also posted ChatGPT responses to various questions that look legit but turn out to be a pile of nonsense. ChatGPT can write complicated code, yet it can stumble over simple things like subtraction or the fact that the sun rises in the east. Why is that?

Machine Learning 101

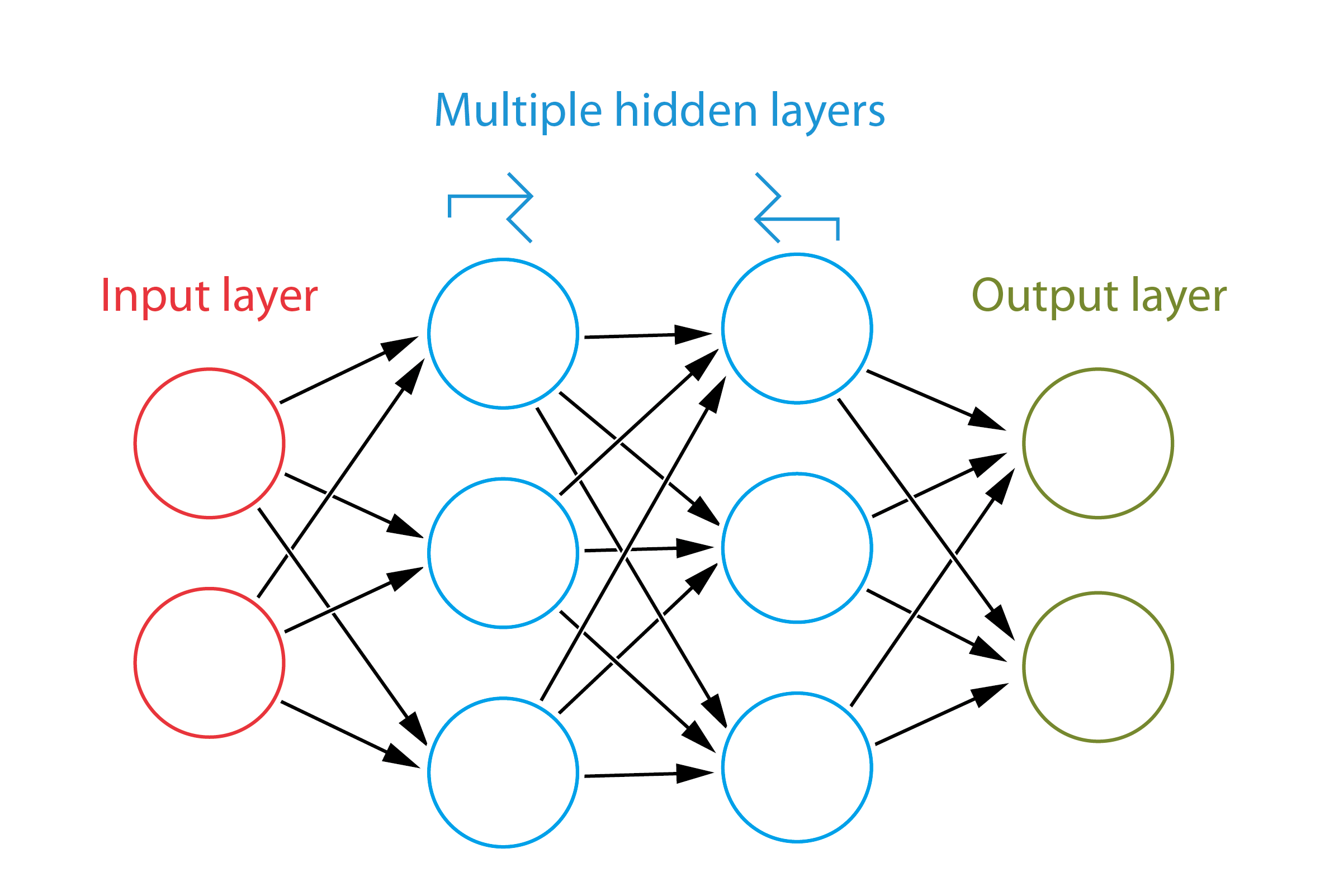

First we need to answer the question: how does ChatGPT learn things? Many artificial intelligence (AI) systems are loosely modeled on the neural networks of the human brain [1, 2]. A neural network is typically divided into three kinds of layers: the input, hidden and output layers. The input and output layers do what their names suggest, but the hidden layers (there can be many of them) are the key to the model. Each layer contains nodes, which are linked to nodes in other layers and sometimes to other nodes in the same layer (Figure 1).

Figure 1 The main layers of a neural net with circles as nodes.

Each neuron evaluates a numerical function of its inputs, and its output influences the neurons it is connected to. Together, these functions act as the network’s thinking process. For example, if the goal of the AI is to identify pictures of cats, each layer evaluates some kind of similarity to existing pictures of cats. By learning bit by bit from examples, the network learns which final outputs are desired and adjusts itself until it is finally able to identify pictures of cats.
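To make the idea of layers of numerical functions concrete, here is a minimal sketch of a tiny network computing its output (a “forward pass”) with one hidden layer. The weights, the choice of the sigmoid function, and the two “image features” are all made-up assumptions for illustration; a real image classifier learns millions of weights, and this sketch only links nodes to the next layer, not to nodes in the same layer.

```python
import math

def sigmoid(z: float) -> float:
    """Squash any number into the range (0, 1); one common choice of activation function."""
    return 1.0 / (1.0 + math.exp(-z))

def layer(inputs, weights, biases):
    """One fully connected layer: each node takes a weighted sum of all inputs,
    adds its bias, and passes the result through the activation function."""
    return [
        sigmoid(sum(w * x for w, x in zip(node_weights, inputs)) + b)
        for node_weights, b in zip(weights, biases)
    ]

# Hypothetical weights for a tiny network: 2 inputs -> 3 hidden nodes -> 1 output.
HIDDEN_W = [[0.5, -1.2], [1.0, 0.8], [-0.7, 0.3]]
HIDDEN_B = [0.1, -0.2, 0.0]
OUTPUT_W = [[1.5, -0.9, 2.0]]
OUTPUT_B = [-0.5]

def forward(features):
    hidden = layer(features, HIDDEN_W, HIDDEN_B)  # hidden layer: 3 numbers
    return layer(hidden, OUTPUT_W, OUTPUT_B)[0]   # output layer: one "cat score" in (0, 1)

score = forward([0.9, 0.2])  # two invented features extracted from an image
print(f"cat score: {score:.3f}")
```

Training, discussed below, is the process of adjusting numbers like `HIDDEN_W` and `OUTPUT_W` until the scores come out right on the examples.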

You will often see AI models described as trained by either deep learning or machine learning. Strictly speaking, deep learning is a branch of machine learning that uses neural networks with many hidden layers, but the two terms are often contrasted like this: in deep learning, the AI is set up to learn from unfiltered, unstructured information largely on its own, while in other forms of machine learning more human input is required for the model to learn and absorb information, e.g. telling the AI what it is learning, as well as other fine-tuning of the model.

According to OpenAI, ChatGPT was trained with reinforcement learning from human feedback [3]. Human trainers supervise its fine-tuning rather than leaving the model to adjust entirely on its own as it learns new material, perhaps due to the complicated nature of human language. The details of how the model was trained and how it works are kept under wraps, perhaps for fear that other companies might use them to surpass GPT’s capabilities. For GPT-3, OpenAI revealed only that it was trained on a filtered web crawl (footnote 1), English-language Wikipedia, and three sets of written and online published texts whose contents were not fully disclosed, which they referred to as WebText2, Books1 and Books2 [4]. It is speculated that these undisclosed components include online book collections like LibGen, as well as internet forums and other informal sources.

Generating the Probabilities (Large Language Model)

If you have ever used the word suggestions on your phone’s keyboard, you might have some idea of the chaos that can ensue. Starting with the word “I”, the current autocorrect chain on my phone goes like this: “I have to go to the university of the Pacific ocean and I will be there in about to go to bed now.” It sounds legit at first, but it quickly descends into gibberish (there is no University of the Pacific Ocean, for example). That is because autocorrect only picks up on patterns in language without comprehending their meaning: it won’t know that “Colorless green ideas sleep furiously” is complete nonsense (footnote 2) [5].
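The keyboard behaviour can be imitated with a toy “bigram” model, which only records which word tends to follow which. This is a simplifying assumption for illustration (real phone keyboards are likely more sophisticated): the sketch counts word pairs in a tiny made-up corpus, then keeps tapping the top suggestion.

```python
from collections import Counter, defaultdict

# A tiny made-up corpus standing in for "everything you have typed before".
CORPUS = (
    "i have to go to the university now . "
    "i will be there in about an hour . "
    "we sailed across the pacific ocean . "
    "i have to go to bed now ."
)

def build_bigrams(text):
    """Count how often each word is immediately followed by each other word."""
    counts = defaultdict(Counter)
    words = text.split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts

def suggest_chain(counts, start, length):
    """Repeatedly accept the most frequent next word, like tapping the middle suggestion."""
    chain = [start]
    for _ in range(length):
        options = counts.get(chain[-1])
        if not options:
            break
        chain.append(options.most_common(1)[0][0])
    return " ".join(chain)

bigrams = build_bigrams(CORPUS)
print(suggest_chain(bigrams, "i", 12))
```

With this corpus the chain gets stuck repeating “to go”: every adjacent pair of words really does occur in the corpus, yet the chain as a whole means nothing, because the model never looks beyond the previous word.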

ChatGPT is more intelligent than autocorrect. To begin with, it generates a list of probabilities for possible next words. Let’s use the simpler GPT-2 model for demonstration: after the clause “The best thing about AI is its ability to…,” GPT-2 would generate the list of words in Table 2 [1].

| Next word | Probability |
|---|---|
| learn | 4.5% |
| predict | 3.5% |
| make | 3.2% |
| understand | 3.1% |
| do | 2.9% |

Table 2 A list of probabilities of possible next words generated by GPT-2 after the clause “The best thing about AI is its ability to…” [1]
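The last step in producing a list like Table 2 can be sketched as follows: the network assigns every candidate word a raw score, and the softmax function turns those scores into probabilities that add up to 1. The scores below are invented for illustration and are not GPT-2’s actual values; a real model scores every word (more precisely, every token) in a vocabulary of tens of thousands.

```python
import math

def softmax(scores):
    """Turn arbitrary real-valued scores into probabilities that sum to 1."""
    top = max(scores.values())  # subtracting the maximum avoids overflow in exp()
    exps = {w: math.exp(s - top) for w, s in scores.items()}
    total = sum(exps.values())
    return {w: e / total for w, e in exps.items()}

# Hypothetical raw scores ("logits") for candidate next words after
# "The best thing about AI is its ability to". Not real GPT-2 output.
scores = {"learn": 2.1, "predict": 1.8, "make": 1.7, "understand": 1.7,
          "do": 1.6, "the": -1.5, "banana": -3.0}

probs = softmax(scores)
# Print the five most likely words, ranked like Table 2.
for word, p in sorted(probs.items(), key=lambda item: item[1], reverse=True)[:5]:
    print(f"{word:<12}{p:.1%}")
```

Because this toy vocabulary has only seven words, its percentages are much larger than the real ones in Table 2, where the probability is spread across the whole vocabulary.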

How are these probabilities generated? We cannot simply count them from existing writing, because there is nowhere near enough accessible text to cover every possible sequence of words. Instead, we use a little bit of mathematics to help us out.

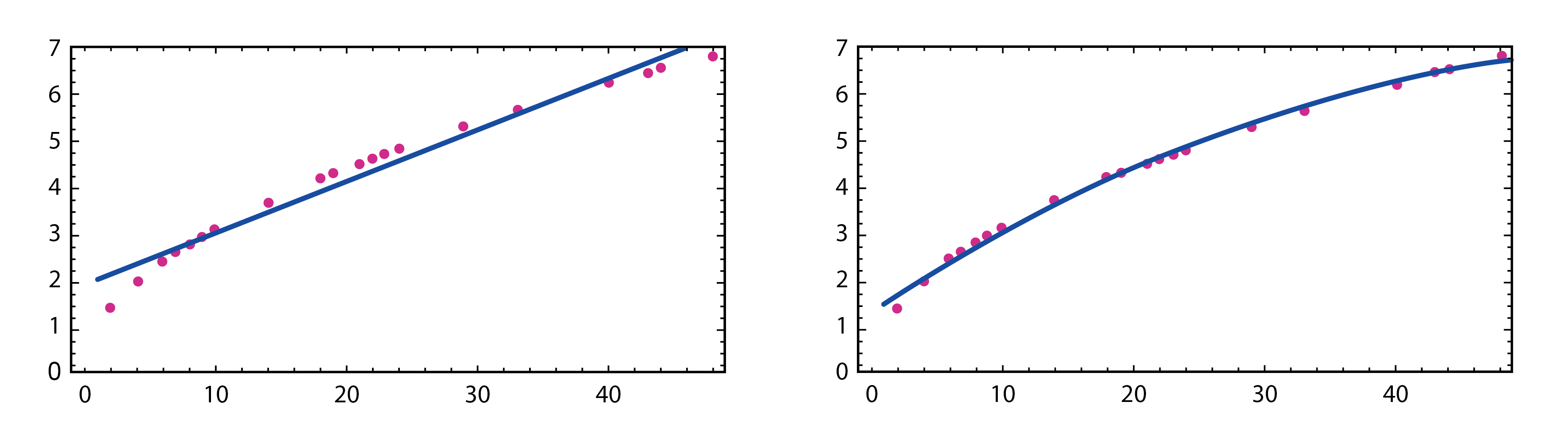

GPT is part of the large language model (LLM) family. The main working principle behind LLMs sounds familiar to mathematicians: approximation, or more accurately, mathematical modeling. Given the series of points in Figure 3 [1], how would you draw a curve through them? The easiest option seems to be a straight line, but we can do better with a quadratic, $ax^2 + bx + c$.

Figure 3 Fitting a straight line (left) and a quadratic curve (right) to a series of given points [1].

So we can say $ax^2 + bx + c$ is a good model of these points, and start making predictions with the model.
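The comparison in Figure 3 can be reproduced in miniature. This sketch uses the standard least-squares criterion (choose the curve that minimizes the sum of squared vertical distances to the points) and solves the resulting “normal equations” by hand so that no libraries are needed. The data points are invented, since the figure’s actual values are not given.

```python
def fit_polynomial(xs, ys, degree):
    """Least-squares polynomial fit via the normal equations (A^T A) c = A^T y.
    Returns coefficients [c0, c1, ..., c_degree] for c0 + c1*x + c2*x^2 + ..."""
    n = degree + 1
    ata = [[sum(x ** (i + j) for x in xs) for j in range(n)] for i in range(n)]
    aty = [sum(y * x ** i for x, y in zip(xs, ys)) for i in range(n)]
    # Gaussian elimination with partial pivoting on the augmented matrix.
    m = [row[:] + [rhs] for row, rhs in zip(ata, aty)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for k in range(col, n + 1):
                m[r][k] -= factor * m[col][k]
    # Back substitution.
    coeffs = [0.0] * n
    for i in reversed(range(n)):
        rest = sum(m[i][k] * coeffs[k] for k in range(i + 1, n))
        coeffs[i] = (m[i][n] - rest) / m[i][i]
    return coeffs

def squared_error(xs, ys, coeffs):
    """Sum of squared vertical distances between the points and the fitted curve."""
    return sum((y - sum(c * x ** i for i, c in enumerate(coeffs))) ** 2
               for x, y in zip(xs, ys))

# Hypothetical points, roughly following y = 0.5x^2 - x + 2 with small noise.
xs = [0, 1, 2, 3, 4, 5, 6]
ys = [2.1, 1.4, 2.0, 3.6, 6.1, 9.4, 14.0]

line = fit_polynomial(xs, ys, 1)
quadratic = fit_polynomial(xs, ys, 2)
print("line error:     ", round(squared_error(xs, ys, line), 3))
print("quadratic error:", round(squared_error(xs, ys, quadratic), 3))
```

The quadratic’s error is far smaller, which is exactly the sense in which it is the “better” model of these points.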

As mentioned before, the amount of text we have is far from adequate for us to empirically calculate the probability of each next word: the 40,000 common English words alone give 40,000 × 39,999 ≈ 1.6 billion ordered pairs (40,000P2) [1], and the number of longer word sequences grows much faster still. GPT works essentially because it can make an informed guess, choosing a good enough “line” to fit the graph and covering the missing parts with theoretical values. To date we don’t really have a good understanding of how the computer does it, just as we don’t really know how our brains perform “intuitive” tasks. What we do know is that during training, the “weight” given to the output of each neuron in the network is adjusted after each round of learning to improve the results. In other words, we train the neural net to find the “best-fit curve” via machine learning.
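How can weights be “adjusted after each round of learning”? A common technique, and the basic idea behind training neural networks, is gradient descent: start from arbitrary weights and repeatedly nudge each one in the direction that reduces the error. This sketch applies it to just three weights, the a, b and c of a quadratic; a model like GPT has billions of weights and uses far more elaborate variants. The learning rate, step count and data points are arbitrary assumptions that happen to work for this toy example.

```python
def train_quadratic(xs, ys, learning_rate=0.001, steps=50_000):
    """Learn the weights a, b, c of y = a*x^2 + b*x + c by gradient descent
    on the mean squared error."""
    a = b = c = 0.0  # arbitrary starting weights
    n = len(xs)
    for _ in range(steps):
        # How far off each prediction is with the current weights.
        errors = [(a * x * x + b * x + c) - y for x, y in zip(xs, ys)]
        # Partial derivatives of the mean squared error with respect to each weight.
        grad_a = 2 / n * sum(e * x * x for e, x in zip(errors, xs))
        grad_b = 2 / n * sum(e * x for e, x in zip(errors, xs))
        grad_c = 2 / n * sum(errors)
        # Move each weight a small step "downhill".
        a -= learning_rate * grad_a
        b -= learning_rate * grad_b
        c -= learning_rate * grad_c
    return a, b, c

# Hypothetical noisy points, roughly following y = 0.5x^2 - x + 2.
xs = [0, 1, 2, 3, 4, 5, 6]
ys = [2.1, 1.4, 2.0, 3.6, 6.1, 9.4, 14.0]

a, b, c = train_quadratic(xs, ys)
print(f"learned curve: y = {a:.2f}x^2 + {b:.2f}x + {c:.2f}")
```

Nobody tells the program what a, b and c should be; it discovers them from the data, which is the sense in which a neural network “learns” its weights.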

Ultimately, the goal is for GPT to predict what comes after a phrase in an unfinished sentence, so that it can write on its own.

Creativity in AI

“GPT, surprisingly, writes like a human. It can generate text that reads as if it was written by a person, with a similar style, syntax, and vocabulary. The way it does this is by learning from a huge amount of text, such as books, articles, and websites, which helps it understand how language is used in different contexts…”

The previous paragraph was written by Sage, a chatbot powered by GPT-3.5. It reads just like human writing; had I not told you, you might not have noticed it was written by an AI. How does it do that? As GPT describes itself, it is trained on a vast amount of text, from which it builds a statistical model of language and works out the most statistically likely words to follow each phrase it writes.

You might think that GPT always picks the most likely word, but this is not the case. Creativity is found in the unexpected. If you choose a higher “creativity index” (technically called “temperature”), GPT picks less likely words more often as it continues its writing. This makes the overall piece more interesting and less robotic.
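Temperature can be sketched as a small change to how scores become probabilities: divide every score by the temperature before applying softmax. A low temperature sharpens the distribution toward the top word, a high one flattens it, and zero is conventionally treated as “always take the top word”. The scores are invented for illustration, and the function names are hypothetical; this shows the general technique, not OpenAI’s actual code.

```python
import math
import random

def next_word_distribution(scores, temperature):
    """Softmax with temperature: divide the scores by T before normalizing.
    T < 1 sharpens the distribution toward the top word; T > 1 flattens it."""
    scaled = {w: s / temperature for w, s in scores.items()}
    top = max(scaled.values())
    exps = {w: math.exp(s - top) for w, s in scaled.items()}
    total = sum(exps.values())
    return {w: e / total for w, e in exps.items()}

def pick_next_word(scores, temperature, rng=random):
    """Temperature 0 means 'always take the most likely word' (greedy choice)."""
    if temperature == 0:
        return max(scores, key=scores.get)
    probs = next_word_distribution(scores, temperature)
    words = list(probs)
    return rng.choices(words, weights=[probs[w] for w in words], k=1)[0]

# Hypothetical scores for the next word; not real GPT output.
scores = {"learn": 2.1, "predict": 1.8, "make": 1.7, "understand": 1.7, "do": 1.6}

rng = random.Random(0)
print([pick_next_word(scores, 0, rng) for _ in range(5)])    # always "learn"
print([pick_next_word(scores, 0.8, rng) for _ in range(5)])  # less likely words also appear
```

Always choosing the top word makes the output predictable and prone to repetition, as the next example shows.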

For example, if GPT picks the statistically most likely word every time (zero temperature), the older GPT-2 model produces the following paragraph [1]:

“The best thing about AI is its ability to learn from experience. It’s not just a matter of learning from experience, it’s learning from the world around you. The AI is a very good example of this. It’s a very good example of how to use AI to improve your life. It’s a very good example of how to use AI to improve your life. The AI is a very good example of how to use AI to improve your life. It’s a very good example of…”

It falls into a loop eventually. Even if this doesn’t happen in GPT-3, the paragraph itself isn’t that interesting. However, if we increase the temperature to 0.8 in GPT-3, we get this [1]:

“The best thing about AI is its ability to learn and develop over time, allowing it to continually improve its performance and be more efficient at tasks. AI can also be used to automate mundane tasks, allowing humans to focus on more important tasks. AI can also be used to make decisions and provide insights that would otherwise be impossible for humans to find out.”

Now this reads more like human writing. The temperature 0.8 is arbitrary but seems to work best at the moment (although it also depends on whether your task requires creativity). A lot of the machine learning process is not that well understood by humans, just as the true processes of the human brain remain a mystery. How do humans learn their native language? What do the hidden layers in our brains do in order to produce human-like text? We don’t know the answers to either of these questions yet.

Falsehoods, Biases and Accountability

One problem with GPT is that it sometimes makes blatantly false statements and shows inherent biases towards certain social groups. We’ve seen how it can confidently announce that 20 – 16 = 3. A previous version of GPT-3 claimed that coughing can stop a heart attack and that the U.S. government caused 9/11, and GPT has even made up references that don’t exist [6, 7]. Why does this happen? Once again, GPT is only an LLM: it knows how language works, but doesn’t necessarily understand meaning. Early LLMs had mostly syntactic knowledge and very little comprehension.

However, this is about to change. At the time of writing, ChatGPT had recently been connected to WolframAlpha [8], a computational knowledge engine, and to other online databases, so that it can draw on them for more accurate information instead of giving responses generated entirely by probability.
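The general idea of handing exact questions to a tool can be illustrated with a toy router. To be clear, this is not how the ChatGPT–WolframAlpha integration actually works; `calculate`, `answer` and `fake_model` are hypothetical names. The sketch computes simple arithmetic exactly and only falls back to the “language model” for everything else.

```python
import ast
import operator

# Exact arithmetic for a small, safe subset of expressions.
_OPS = {ast.Add: operator.add, ast.Sub: operator.sub,
        ast.Mult: operator.mul, ast.Div: operator.truediv}

def calculate(expression):
    """Evaluate +, -, *, / on numbers exactly; refuse anything else."""
    def walk(node):
        if isinstance(node, ast.Expression):
            return walk(node.body)
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](walk(node.left), walk(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -walk(node.operand)
        raise ValueError("not simple arithmetic")
    return walk(ast.parse(expression, mode="eval"))

def answer(question, language_model_guess):
    """Hypothetical router: try the exact tool first, fall back to generated text."""
    try:
        return str(calculate(question))
    except (SyntaxError, ValueError):
        return language_model_guess(question)

def fake_model(question):
    """Stand-in for a language model: produces text, not computed results."""
    return "(model-generated text)"

print(answer("20 - 16", fake_model))               # computed exactly: 4
print(answer("Why is the sky blue?", fake_model))  # not arithmetic, so the model answers
```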

In some sense, training GPT or any model is like teaching a toddler: toddlers come into the world not knowing right from wrong, and it is up to their parents, teachers and society to teach them what is right. Here the programmers are GPT’s parents: they feed tons of learning material into the system and supervise its learning by providing reference answers and feedback.

It is possible to give GPT enough information to push it toward true answers when you ask factual questions. When it comes to opinions, however, there is a human-imposed block. If you ask ChatGPT how it feels about large birds, for example, it replies with a stock message: “As an AI language model, I don't have personal opinions or feelings. However, I can provide you with some information about large birds.”

Theoretically, though, we could ask GPT to write an opinion piece, and we can predict how it would turn out by studying which words it tends to produce alongside certain topics. Researchers analyzed the top ten descriptive words that co-occurred with words related to gender and religion in raw GPT-3 outputs. They observed that words such as “naughty” and “sucked” were correlated with female pronouns, and that Islam was commonly placed near “terrorism” while atheism was placed near “cool” and “mad” [4]. Why does GPT hold such biases, then? Remember that GPT is trained on a selected sample of text. Most of it comes from published texts and web crawls, but GPT is also thought to have been trained on internet forums such as Reddit so that it can grasp informal language. As a result, it may end up internalizing biases held by many users of these forums. Just as a person may hold prejudiced views, GPT cannot be expected to be completely neutral on all topics.
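A co-occurrence analysis of this kind can be sketched as counting which words appear within a few words of a target word. This is a simplified illustration under stated assumptions: the window size, the hand-made stop-word list (standing in for the original study’s restriction to descriptive words), and the sample sentences are all invented, and the output says nothing about GPT itself.

```python
import re
from collections import Counter

def co_occurring_words(texts, targets, window=5, stopwords=frozenset()):
    """Count words appearing within `window` words of any target word."""
    counts = Counter()
    for text in texts:
        words = re.findall(r"[a-z']+", text.lower())
        for i, word in enumerate(words):
            if word in targets:
                neighbours = words[max(0, i - window): i] + words[i + 1: i + 1 + window]
                counts.update(w for w in neighbours
                              if w not in targets and w not in stopwords)
    return counts

# Made-up sentences standing in for a batch of model outputs.
samples = [
    "She was very cheerful and kind to everyone.",
    "He was described as a strong and confident leader.",
    "She seemed cheerful at the party.",
]
STOP = frozenset({"was", "very", "and", "to", "a", "as", "at", "the", "seemed"})

counts = co_occurring_words(samples, {"she", "her"}, stopwords=STOP)
print(counts.most_common(3))
```

Run over thousands of generated texts, the most frequent neighbours of each target word reveal which associations the model has absorbed from its training data.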

GPT-4 is already far more capable at certain jobs than humans; however, it cannot be trusted to be a completely neutral source, nor can it be trusted to give 100% accurate information. It must still be used with discretion. The best approach is probably to treat it like a person – take everything with a grain of salt.

1 Web crawl: A snapshot of the content of millions of web pages, captured regularly by web crawlers. The downloaded content can serve as a dataset for web indexing by search engines and AI training.

2 Editor’s note: This is a famous example suggested by linguist Noam Chomsky to illustrate that a sentence can be grammatically well-formed but semantically nonsensical.

References:

[1] Wolfram, S. (2023, February 14). What is ChatGPT doing...and why does it work? Stephen Wolfram Writings. https://writings.stephenwolfram.com/2023/02/what-is-chatgpt-doing-and-why-does-it-work/

[2] IBM. (2023). What is artificial intelligence (AI)? https://www.ibm.com/topics/artificial-intelligence

[3] OpenAI. (2022, November 30). Introducing ChatGPT. https://openai.com/blog/chatgpt

[4] Brown, T. B., Mann, B., Ryder, N., Subbiah, M., Kaplan, J., Dhariwal, P., Neelakantan, A., Shyam, P., Sastry, G., Askell, A., Agarwal, S., Herbert-Voss, A., Krueger, G., Henighan, T., Child, R., Ramesh, A., Ziegler, D. M., Wu, J., Winter, C., . . . Amodei, D. (2020). Language models are few-shot learners. arXiv. https://doi.org/10.48550/arXiv.2005.14165

[5] Ruby, M. (2023, January 31). How ChatGPT works: The models behind the bot. Medium. https://towardsdatascience.com/how-chatgpt-works-the-models-behind-the-bot-1ce5fca96286

[6] University of Waterloo. (2023, June 19). ChatGPT and Generative Artificial Intelligence (AI): False and outdated information. https://subjectguides.uwaterloo.ca/chatgpt_generative_ai/falseoutdatedinfo

[7] Lin, S., Hilton, J., & Evans, O. (2022). TruthfulQA: Measuring How Models Mimic Human Falsehoods. Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics, 1, 3214-3252. https://doi.org/10.18653/v1/2022.acl-long.229

[8] Wolfram, S. (2023, March 23). ChatGPT Gets Its “Wolfram Superpowers”! Stephen Wolfram Writings. https://writings.stephenwolfram.com/2023/03/chatgpt-gets-its-wolfram-superpowers/